If you are an engineer or developer tasked with building embedded systems (or software, devices, networks, etc.), one of your highest priorities is—or should be—identifying and minimizing potential data security vulnerabilities. To effectively meet this goal, you need to understand how systems get hacked, and ultimately understand how to “think like a hacker.”

Hacking is all about exploiting vulnerabilities. These could be design flaws, weak access controls, misconfigurations, or many other issues. This article peeks into the mind of a hacker. As you’ll see, their process and mindset in finding and exploiting vulnerabilities are much different from how engineers approach system development.

What goes on inside a hacker’s mind? It’s an entertaining, chaotic, and extremely volatile place, so bring coffee (lots of it) and let’s dive in.

Hacker Mindset: On a Mission

The most striking difference between hackers and engineers is in how they address a challenge. Engineers (and scientists, for that matter) are systematic. Problems are first defined and analyzed, a plan (or hypothesis) is formulated, and the hypothesis is tested. Results are then analyzed for successes and failures, and conclusions are drawn.

This is the scientific method. It has served humanity well for hundreds of years. Hacking subverts this process. First, there is no plan, but rather a mission. Plans for a hacker are loose and flexible. Remember, a hacker is not building something that must stand the test of time. Rather, they are breaking in. They do not need to satisfy a manager or CEO; they only need to complete a mission.

Where engineers are systematic, hackers are pragmatic and “chain reaction” driven in their methodology. There are similarities, but the core difference is that hackers will go to almost any length to accomplish their mission. Moreover, they can discard results that do not help them, rather than having to explain them to others.

This is sometimes described as list vs. path thinking:

- Scientists and engineers work against lists of tasks and must report results.

- Hackers follow paths. They will continue to follow a path until it stops advancing their mission.

In brief, engineers aim to be thorough; hackers aim to be effective. These may seem like minor nuances, but when put into action those nuances have significant implications.

Common Pattern: The Kill Chain

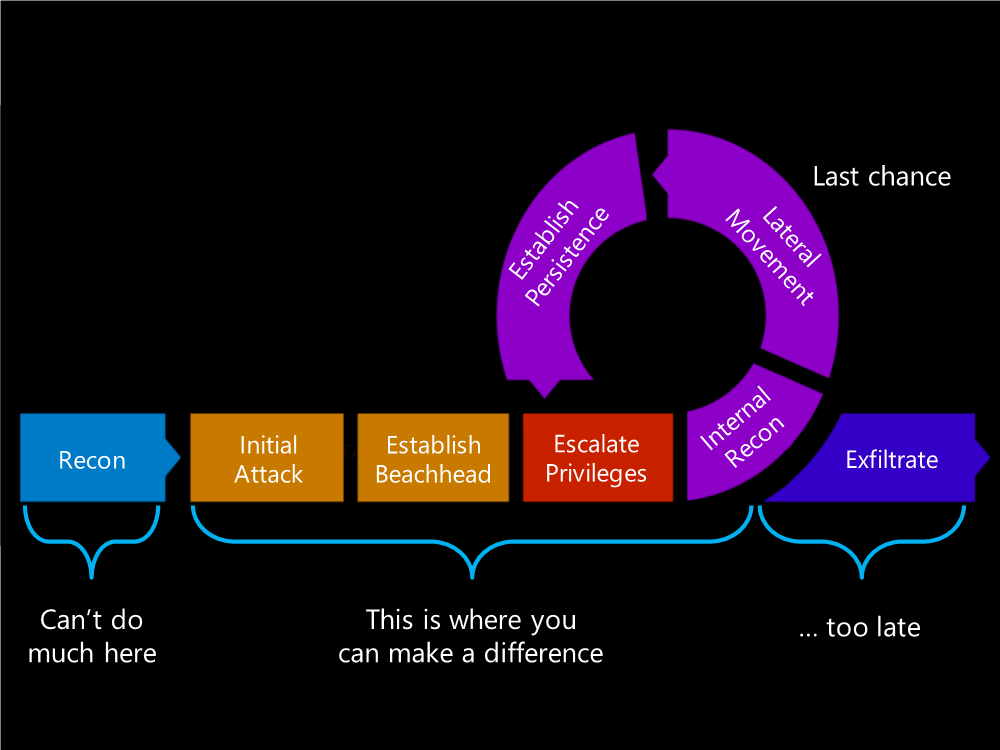

When a hacker is breaking into an environment or system, they typically follow a common pattern, called a kill chain. As the hacker progresses through systems and networks, they will seek out higher degrees of access and authority within the environment (Figure 1). Eventually, when they have sufficient access, they can steal the data they want and/or plant malicious code.

Figure 1: If you can catch hackers early in the kill chain, you can prevent a hack from happening.

Hackers often dwell inside an environment for a long time: 100–140 days on average. In the 2013 breach of retail chain Target, for example, the hackers took more than 100 days to fully execute the attack. If you can catch hackers early in the kill chain, you can prevent a hack from happening.

It is important to note that most hacking is automated using bots. While we may describe these steps in the context of an actual person performing them, bots are what truly do all the real work.

Thinking Hacker

Hackers look at things differently. In particular, they:

- Observe the obvious

- Imagine the worst

- Explore every potential access point

- Love all things data

- Understand that humans are the weak link

- Love obscure technical information

- Find and exploit back doors

- Exploit third-party indifference

- Seek credentials

- Pick up your garbage

Observe the Obvious

It is easy to miss a glaring weakness when you are deep in development. Step back from the development to ask yourself some basic questions about your work. Use the “Five Whys Deep” assessment:

- Why does your product do that?

- Why is that necessary?

- Why did you design it that way?

- Why is it good?

- Why not do it differently?

The point of this exercise is to identify obvious weaknesses. A hacker will notice them much faster than you think.

Imagine the Worst

What is the worst possible scenario? How likely is it to happen? Hackers do not have a moral compass. They will not feel compassion for you when your network or applications are struggling to recover from a disaster. As such, you need to make plans to handle those worst-case scenarios.

However, be careful not to get entangled in so-called “zombie scenarios”—that is, disasters that arise due to a ludicrous sequence of events with no response. Most zombie movies are based on this premise.

Explore Every Potential Access Point

You must know every possible way anybody or anything can access your system. A hacker will try all of them, many times. You might think your Bluetooth interface is super secure, but there are dozens of ways to exploit Bluetooth specifically, any of which can render it completely insecure. Make sure you aggressively test every interface, regardless of how obscure you made it.

Love All Things Data

Hackers love data, and some types more than others. Data storage is also one of the ways hackers gain persistence in an environment. You must analyze your system’s data:

- How is the data stored?

- How is it transmitted?

- Who can access the data?

- How is that access monitored, managed, and controlled?

- How is access logged? Where does that data go?
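
The logging question above can be made concrete with a small sketch. This is only an illustration, not a prescribed design: the logger name `audit`, the `read_record` function, and the dictionary used as a data store are all hypothetical, and where the log records end up depends on the handlers your deployment configures.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Optional

# Hypothetical logger name; the destination of its records (file, syslog,
# remote collector) is decided by whatever handlers the deployment attaches.
audit_log = logging.getLogger("audit")
audit_log.setLevel(logging.INFO)


def read_record(user: str, record_id: str, store: dict) -> Optional[str]:
    """Return a record from the store and log who asked for it."""
    value = store.get(record_id)
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": "read",
        "record": record_id,
        "found": value is not None,
    }
    # One JSON object per line keeps the log easy to search and parse later.
    audit_log.info(json.dumps(entry))
    return value
```

Logging failed lookups as well as successful ones matters here, because repeated requests for records that do not exist can be an early sign of someone probing the system.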

Understand That Humans Are the Weak Link

Hackers understand that humans are the weak link in data security. Not only are we inconsistent and unreliable, but we are extremely susceptible to manipulation. If your system involves humans in any capacity (which it does), then it has weaknesses.

Most information security problems boil down to human weaknesses. Whether we misconfigure systems or write poor code, humans are the weakest link. Assume that users will make mistakes, and a lot of them, so give human touchpoints extra attention.

Love Obscure Technical Information

Hackers love obscure technical information and will dig up a random document you put in Pastebin years ago to use that document’s data against your system. This is part of the fun of hacking new systems.

Be cautious about what types of technical data you release to the public. Assume that hackers will get it and analyze it. If you have a product that is being developed in an open environment, then be extra diligent in designing components and features in a secure manner.

Find and Exploit Back Doors

Many hackers got their first taste of hacking from the movie WarGames in the 1980s. There is a great scene midway through the movie where a computer scientist rebukes his nerdy colleague for thinking of back doors to computer systems as secrets: “Mr. Potato Head! Back doors are not secrets!”

His words are just as true today as they were then. Back doors into applications or devices are common, and hackers will look for them. Using them is one of the oldest and most reliable techniques for hacking into a system. It worked in WarGames, and it still works today.

Exploit Third-Party Indifference

While you might deeply care about the security of your system, do your suppliers or partners have the same level of concern? Hackers routinely target third-party components because they can attack a broad set of targets with a single technique. Heartbleed was a perfect example of the danger of insecure third-party components. It was a flaw in OpenSSL’s implementation of the TLS heartbeat extension, and OpenSSL is inside millions of products. That means one vulnerability left millions (probably billions) of devices vulnerable to attack.

If you integrate a third-party component into your system, you inherit all the weaknesses of that component. While the product may belong to somebody else, you will be responsible for its security.

Seek Credentials

Legitimate user accounts are ultimately what hackers want. Once they have credentials, hackers can escalate their privileges and then move through your system. Moreover, use of legitimate credentials does not usually raise alarms.

While you may not be able to protect user credentials all the time (as they are in the hands of humans), you can still prevent those credentials from being used maliciously. This begins with implementing least privilege rights—that is, users must never have any more access than they need. Furthermore, you should aggressively test systems against privilege escalation attacks.
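
A minimal sketch of a least-privilege check might look like the following. The role names, the action names, and the `is_allowed` helper are hypothetical and chosen only for illustration; the point is the deny-by-default behavior, where anything not explicitly granted is refused.

```python
# Hypothetical roles and the only actions each one is granted.
ROLE_PERMISSIONS = {
    "viewer": frozenset({"read"}),
    "operator": frozenset({"read", "write"}),
}


def is_allowed(role: str, action: str) -> bool:
    """Return True only if the role was explicitly granted the action."""
    # Unknown roles get an empty permission set, so they are denied everything.
    granted = ROLE_PERMISSIONS.get(role, frozenset())
    return action in granted
```

With this shape, a stolen "viewer" credential cannot write or administer anything, and a role that was never defined cannot do anything at all. A privilege escalation test then amounts to checking that no sequence of allowed actions lets a role add entries to its own permission set.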

Pick Up Your Garbage

Is your system part of a larger whole? Could blinding one part of the system leave other parts open for attack? What about feeding your system false data? This was part of how the Stuxnet malware worked: it fed false readings to the systems monitoring industrial equipment while pushing that equipment beyond safe limits. If a hacker wants to steal data from you, or disrupt operations, it may be as easy as overloading your system with too much network traffic.

Denial of service attacks are difficult to stop. When designing your system, you must consider how it could potentially be overloaded and build in mechanisms to either stop or ignore overwhelming amounts of information. Moreover, it is important to always validate that data sent to your system is coming from a trusted source.
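
One common mechanism for ignoring overwhelming amounts of input is a token bucket rate limiter. The sketch below is an assumption about how such a limiter could look, not a complete denial of service defense: the `TokenBucket` class, its parameters, and the injectable clock are hypothetical names introduced for this example.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, then `rate` requests per second."""

    def __init__(self, rate: float, capacity: float, now=time.monotonic):
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum number of stored tokens
        self._now = now           # clock function, injectable for testing
        self._tokens = capacity   # start with a full bucket
        self._last = now()

    def allow(self) -> bool:
        """Return True if a request may proceed, False if it should be dropped."""
        current = self._now()
        elapsed = current - self._last
        self._last = current
        # Refill for the time that has passed, never beyond capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

A device would typically keep one bucket per interface or per sender and call `allow()` before doing any expensive work on a message, so that excess traffic is discarded cheaply instead of exhausting memory or processing time.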

Conclusion

As a design engineer, identifying and minimizing potential data security vulnerabilities are primary goals. Hackers approach their work much differently than engineers do; rather than taking a systematic approach, they prefer a kill chain approach where they incrementally and persistently look for vulnerabilities to exploit.

“Thinking like a hacker” requires you to look at the systems you design differently. Part of this means understanding the technical aspects of vulnerabilities and solutions; however, a larger part requires observing the obvious, understanding human errors and indifference, and understanding what hackers seek and the clues they use.

NOTE: This blog was originally published at the Mouser Electronics Blog.